Jason J. Corso

Multi-Class Label Propagation in Videos

The effective propagation of pixel labels through the spatial and
temporal domains is vital to many computer vision and multimedia
problems, yet little attention has been paid to propagation in the
temporal (video) domain. We have begun to explore this problem in the
context of general real-world "videos in the wild" and surveillance
videos. Our current efforts primarily focus on mixing motion
information with appearance information. Previous video label
propagation algorithms largely avoided dense optical flow estimation
because of its computational cost and inaccuracies, and relied heavily
on complex (and slower) appearance models.
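To make the idea of mixing motion with appearance concrete, here is a minimal sketch in Python with NumPy. It is an illustration under stated assumptions, not the method from the paper: the function name `propagate_labels`, the backward-flow convention, the per-class mean-colour appearance model, and the `tol` threshold are all hypothetical choices made for this example. Labels are first warped along a given dense flow field (the motion cue); where the colour of the warped source pixel disagrees with the new frame, the label falls back to the class with the nearest mean colour (the appearance cue).

```python
import numpy as np

def propagate_labels(labels_prev, frame_prev, frame_next, flow_back, tol=30.0):
    """Propagate integer labels from the previous frame to the next frame.

    Assumed convention (hypothetical): flow_back[y, x] = (dx, dy) points from
    pixel (x, y) in the next frame to its source location in the previous frame.
    """
    h, w = labels_prev.shape
    ys, xs = np.mgrid[0:h, 0:w]

    # Motion cue: nearest-neighbour lookup of the source pixel, clamped to the image.
    src_x = np.clip(np.rint(xs + flow_back[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys + flow_back[..., 1]).astype(int), 0, h - 1)
    warped = labels_prev[src_y, src_x]

    # Appearance cue: mean colour of each class in the previous frame.
    classes = np.unique(labels_prev)
    means = np.stack([frame_prev[labels_prev == c].mean(axis=0) for c in classes])
    # Distance from every next-frame pixel to every class mean: shape (h, w, n_classes).
    dist = np.linalg.norm(
        frame_next[:, :, None, :].astype(float) - means[None, None], axis=-1
    )
    nearest = classes[dist.argmin(axis=-1)]

    # Keep the warped label where the colours agree; otherwise use appearance only.
    src_color = frame_prev[src_y, src_x].astype(float)
    mismatch = np.linalg.norm(frame_next.astype(float) - src_color, axis=-1) > tol
    return np.where(mismatch, nearest, warped)

# Toy example: a white region (class 1) moves one pixel to the right.
labels_prev = np.zeros((4, 6), dtype=int)
labels_prev[:, 3:] = 1
labels_true = np.zeros((4, 6), dtype=int)
labels_true[:, 4:] = 1
frame_prev = np.repeat(labels_prev[..., None] * 255.0, 3, axis=-1)
frame_next = np.repeat(labels_true[..., None] * 255.0, 3, axis=-1)
flow_back = np.zeros((4, 6, 2))
flow_back[..., 0] = -1.0  # every pixel came from one pixel to its left
labels_next = propagate_labels(labels_prev, frame_prev, frame_next, flow_back)
```

In this toy case the flow alone recovers the shifted labels. If the flow is wrong (for example, all zeros), the colour check catches the stale labels along the moving boundary and the appearance model corrects them, which is the motivation for combining the two cues.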

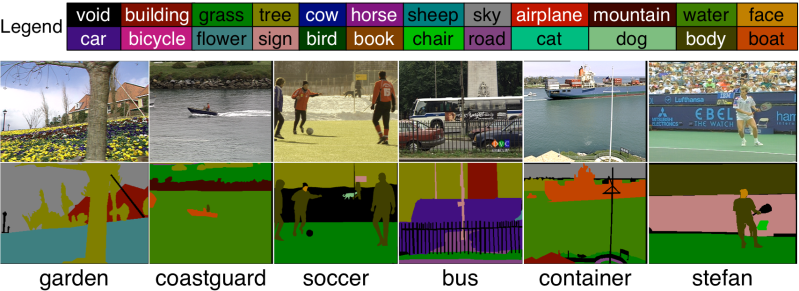

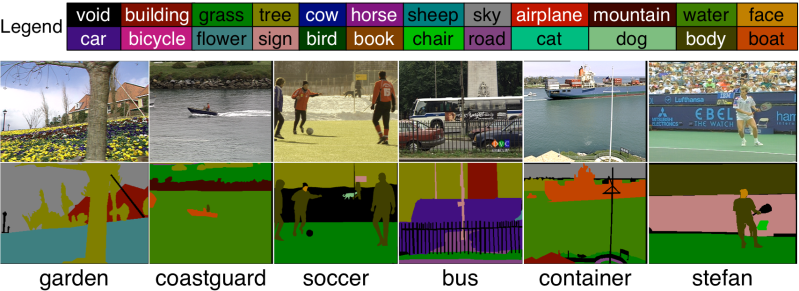

Label Propagation Benchmark Dataset

We used a subset of the videos from xiph.org as the basis of our benchmark
dataset for label propagation. Existing datasets either restricted
the study to two classes or were taken in restricted settings, such
as from the dash of a moving vehicle. Our new data set has general
motion and presents stratified levels of complexity. We continue to
add to the labels and will release additional videos in the future.

For more information, see Albert Chen's page.

Download the full data set.

Code

Code from our WNYIPW paper is here with the config file.
Alternatively, download the dataset above; the code is included in the
package.

If you use the dataset or the code, the associated cite is below.

Publications

| [1] | A. Y. C. Chen and J. J. Corso. Propagating multi-class pixel labels throughout video frames. In Proceedings of Western New York Image Processing Workshop, 2010. [ bib | .pdf ] |

Acknowledgements

This work is partially supported by NSF CAREER IIS-0845282 [project page].