Abstract

We consider image transformation problems, where an input

image is transformed into an output image. Recent methods for such

problems typically train feed-forward convolutional neural networks using

a

per-pixel loss between the output and ground-truth images. Parallel

work has shown that high-quality images can be generated by defining

and optimizing

perceptual loss functions based on high-level features

extracted from pretrained networks. We combine the benefits of both approaches,

and propose the use of perceptual loss functions for training

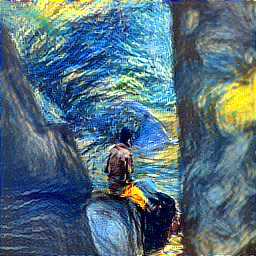

feed-forward networks for image transformation tasks. We show results

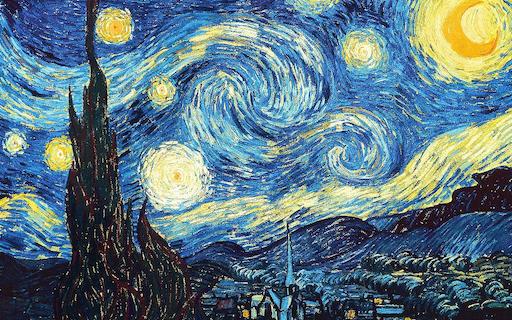

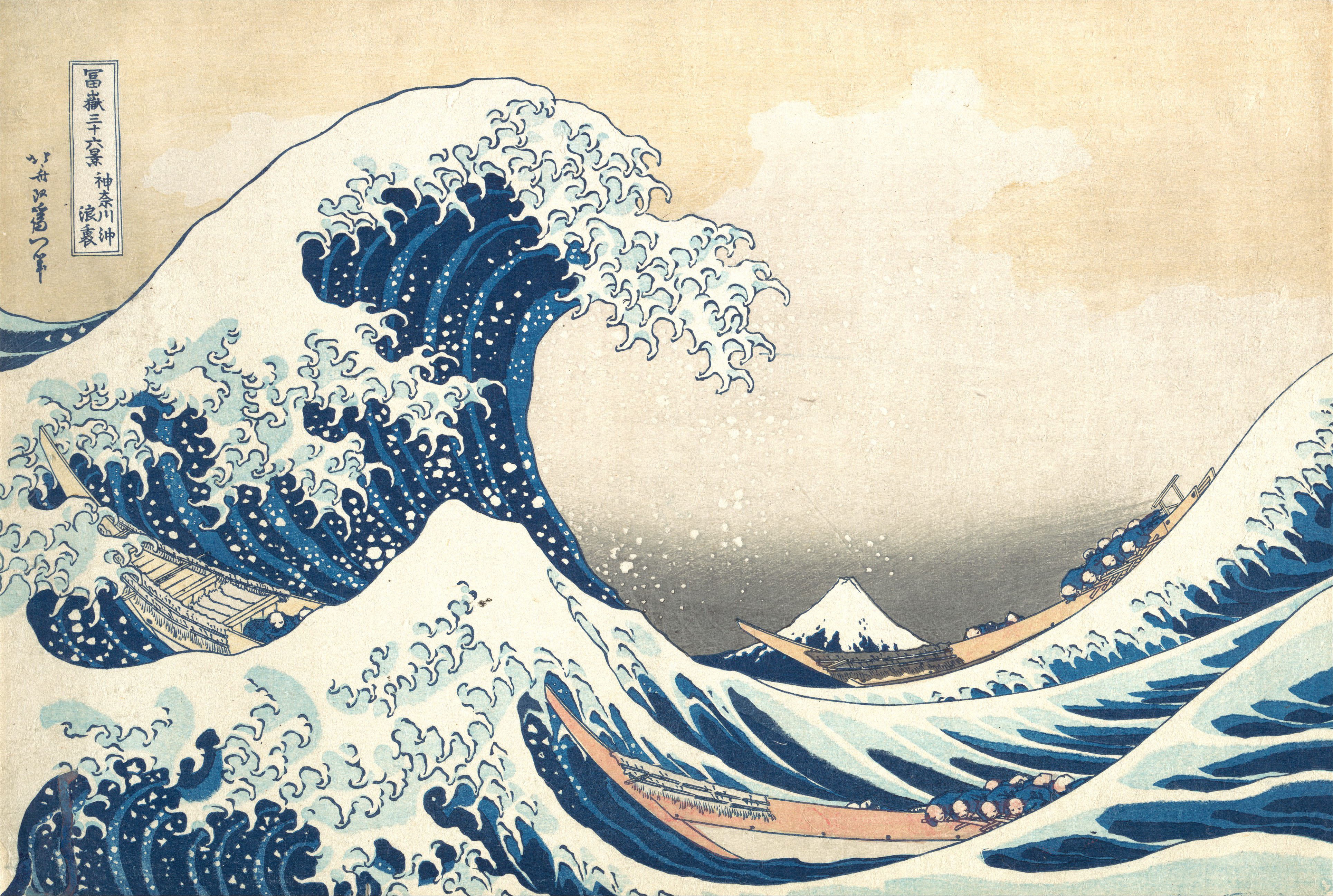

on image style transfer, where a feed-forward network is trained to solve

the optimization problem

proposed by Gatys et al.

in real-time. Compared to the optimization-based method, our network gives

similar qualitative results but is three orders of magnitude faster. We also

experiment with single-image super-resolution, where replacing a per-pixel loss

with a perceptual loss gives visually pleasing results.
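The following minimal NumPy sketch makes the contrast between a per-pixel loss and a perceptual loss concrete. It assumes images of shape (C, H, W). In place of a pretrained network, it uses a hypothetical fixed feature extractor (`toy_features`: a 1×1 channel mix, ReLU, and 2×2 average pooling), whereas a real implementation would use activations from a pretrained network. The feature loss and the Gram-matrix style loss follow one common formulation of the perceptual losses associated with Gatys et al. All function names are illustrative, not taken from any library.

```python
import numpy as np

def per_pixel_loss(y_hat, y):
    """Mean squared error computed directly on pixels; inputs have shape (C, H, W)."""
    return float(np.mean((y_hat - y) ** 2))

def toy_features(img, weights):
    """Hypothetical stand-in for one layer of a pretrained network.

    Applies a fixed 1x1 channel mix (weights: (C_out, C)), a ReLU, and
    2x2 average pooling. Returns an array of shape (C_out, H//2, W//2).
    """
    mixed = np.einsum("oc,chw->ohw", weights, img)
    act = np.maximum(mixed, 0.0)
    c, h, w = act.shape
    act = act[:, : h - h % 2, : w - w % 2]  # crop to even size before pooling
    return act.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def feature_loss(y_hat, y, weights):
    """Squared feature distance normalized by C*H*W (i.e. a mean over features)."""
    diff = toy_features(y_hat, weights) - toy_features(y, weights)
    return float(np.mean(diff ** 2))

def gram(feat):
    """Gram matrix of a (C, H, W) feature map, normalized by C*H*W."""
    c, h, w = feat.shape
    f = feat.reshape(c, h * w)
    return f @ f.T / (c * h * w)

def style_loss(y_hat, y_style, weights):
    """Squared Frobenius norm of the difference between Gram matrices."""
    g_hat = gram(toy_features(y_hat, weights))
    g_style = gram(toy_features(y_style, weights))
    return float(np.sum((g_hat - g_style) ** 2))

rng = np.random.default_rng(0)
x = rng.random((3, 8, 8))
w = rng.standard_normal((4, 3))
x_mirrored = np.flip(x, axis=2)  # horizontal mirror; width 8 keeps pooling windows aligned

print(per_pixel_loss(x, x_mirrored), style_loss(x, x_mirrored, w))
```

The Gram matrix sums over spatial positions, and this toy extractor commutes with a horizontal mirror. As a result, mirroring the image leaves `style_loss` essentially zero while `per_pixel_loss` stays nonzero. This illustrates how a style loss compares feature statistics rather than pixel positions.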