Action Bank™

Information and Code Download

Jason J. Corso


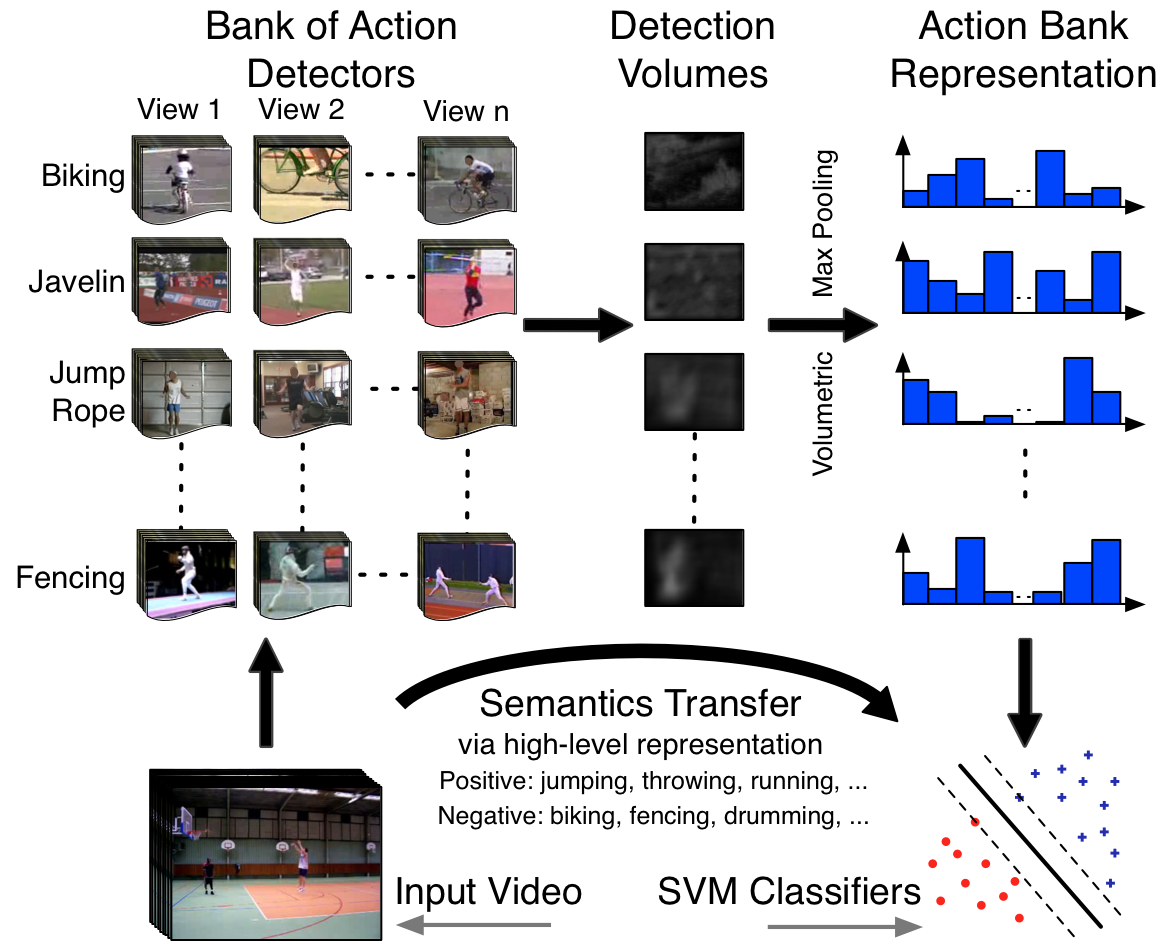

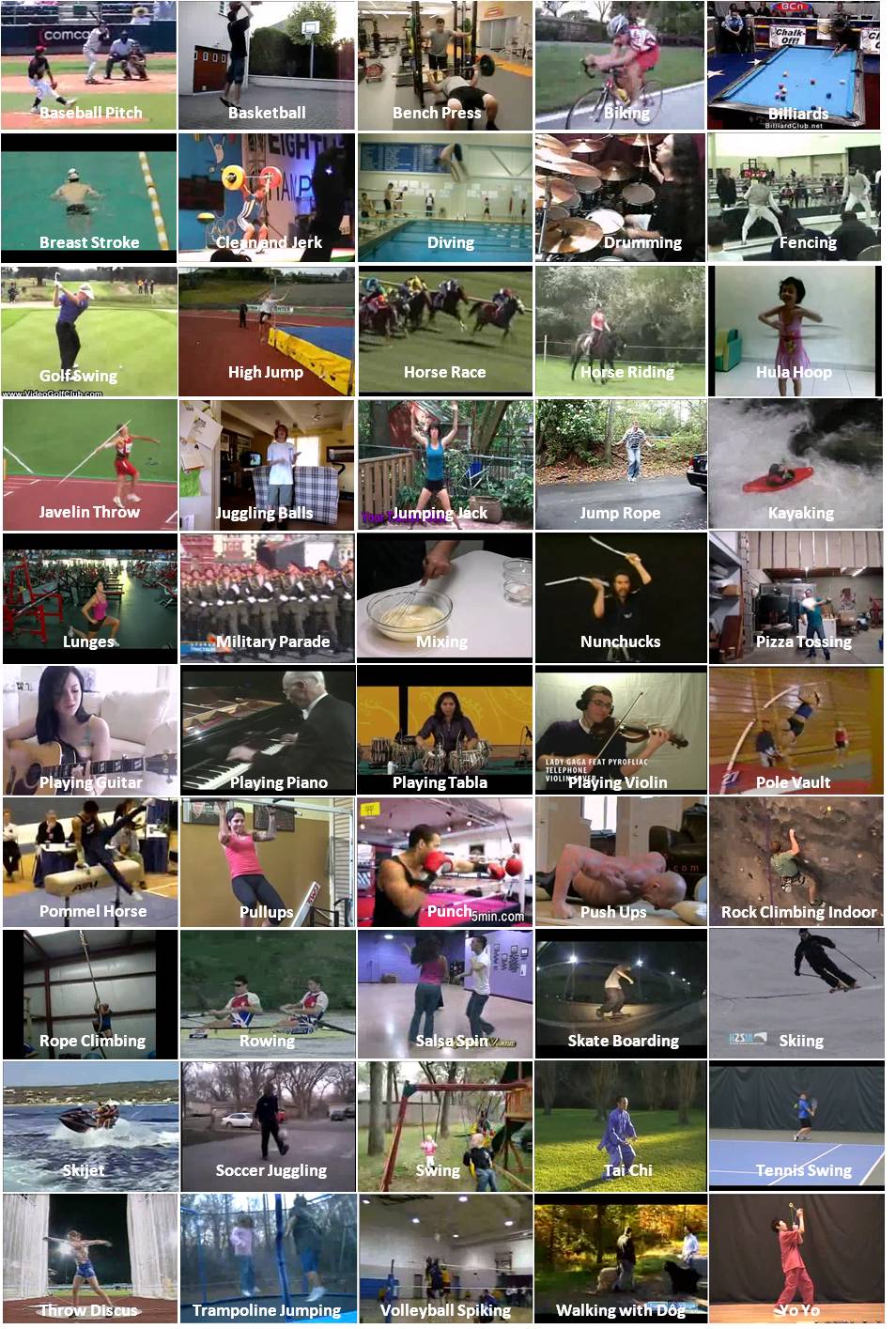

Action Bank™: A High-Level Representation of Activity in Video

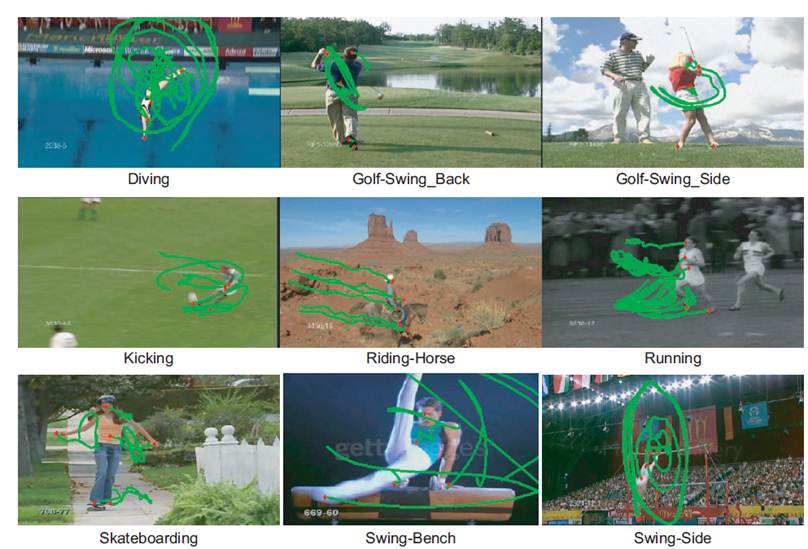

Human motion and activity are extremely complex. Most promising recent approaches are based on low- and mid-level features (e.g., local space-time features, dense point trajectories, and dense 3D gradient histograms). In contrast, the Action Bank™ method is a new high-level representation of activity in video. In short, it embeds a video into an "action space" spanned by various action detector responses, such as walking-to-the-left, drumming-quickly, etc. The individual action detectors in our implementation of Action Bank™ are template-based detectors using the action spotting work of Derpanis et al. CVPR 2010. Each individual action detector's correlation video volume is transformed into a response vector by volumetric max-pooling (3 levels for a 73-dimension vector); in our library and methods there are 205 action detector templates in the bank, sampled broadly in semantic and viewpoint space. Our paper shows how a simple classifier like an SVM can use this high-dimensional representation to effectively recognize realistic videos of complex human activities.
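The 73-dimension figure can be read off the pooling structure: assuming an octree-style subdivision in which level l splits the volume into 2^l cells along each of the three axes, three levels give 1 + 8 + 64 = 73 maxima. The NumPy sketch below only illustrates that arithmetic. The function name `volumetric_max_pool` and the exact cell layout are assumptions made for illustration, not the code shipped in the download.

```python
import numpy as np

def volumetric_max_pool(volume, levels=3):
    """Max-pool a 3D correlation volume over an octree-style grid.

    Level l splits every axis into 2**l cells, so levels=3 yields
    1 + 8 + 64 = 73 values. Hypothetical helper, not the released code.
    Each axis needs at least 2**(levels - 1) samples so no cell is empty.
    """
    volume = np.asarray(volume, dtype=float)
    pooled = []
    for level in range(levels):
        cells = 2 ** level
        # Integer cell boundaries along each of the three axes.
        edges = [np.linspace(0, n, cells + 1).astype(int) for n in volume.shape]
        for i in range(cells):
            for j in range(cells):
                for k in range(cells):
                    block = volume[edges[0][i]:edges[0][i + 1],
                                   edges[1][j]:edges[1][j + 1],
                                   edges[2][k]:edges[2][k + 1]]
                    pooled.append(block.max())
    return np.array(pooled)
```

If one such vector per detector were concatenated, a single bank scale with 205 templates would give 205 × 73 = 14965 values per video.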

On this page, you will find downloads for our source code, already-processed versions of major vision data sets, and a more detailed description of the method and the code.


News / Updates

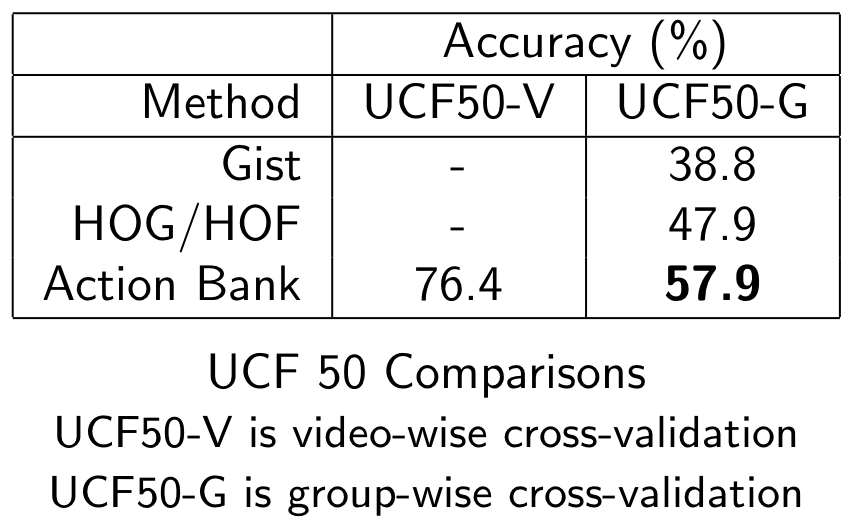

- 14 Sept. 2012 -- Posted the splits we used for the group-wise cross-validation on UCF50, since there is no official split posted and we want to facilitate apples-to-apples performance comparisons. You can download it here or at the link below.

- 25 June 2012 -- Action Bank wins Best Open Source Code Award 3rd Prize at CVPR 2012.

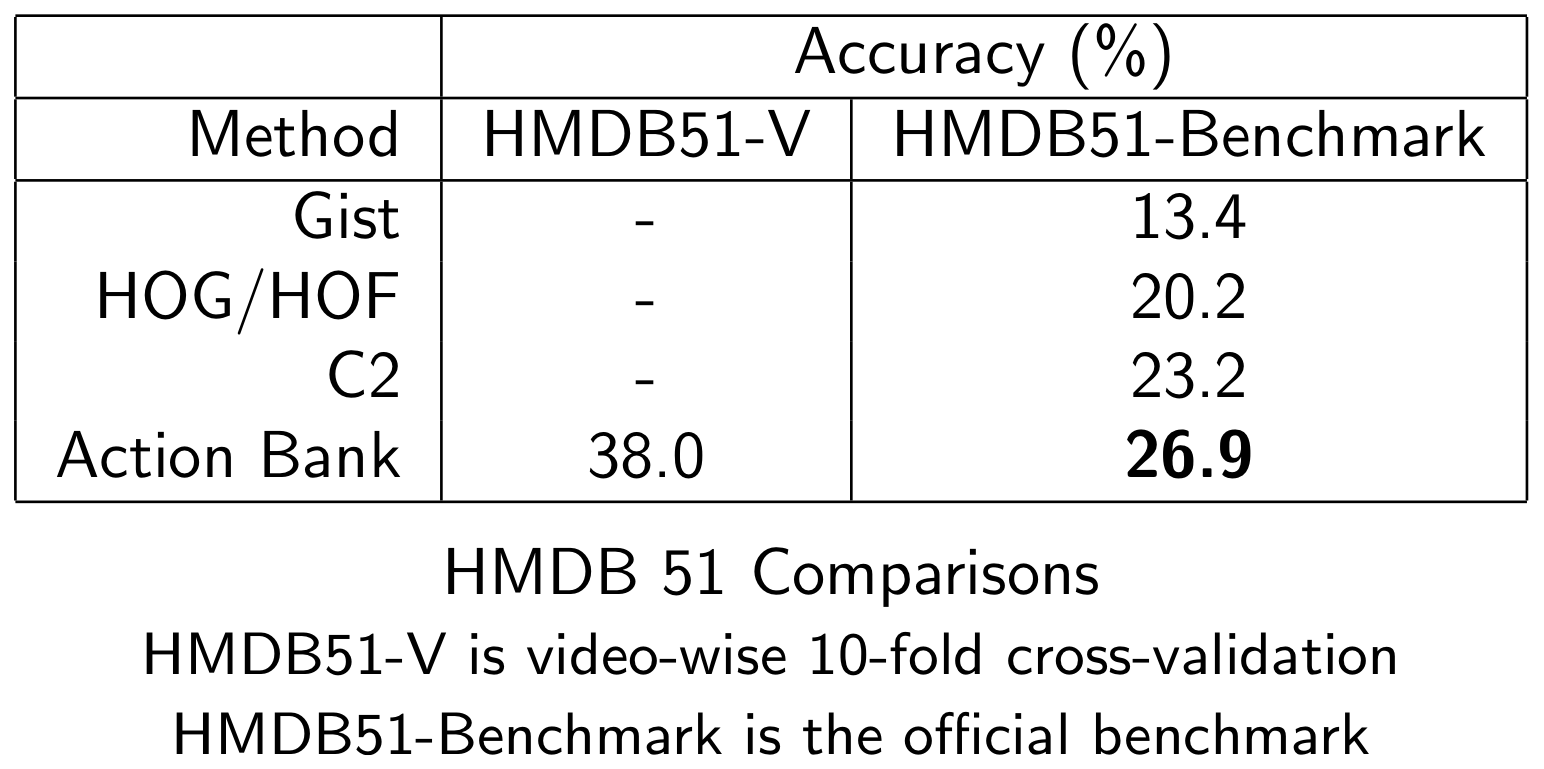

- 5 June 2012 -- Added HMDB51 comparative results.

- 4 June 2012 -- Some detailed help / answers to questions have been added to this page in response to feedback we've received.

- 31 May 2012 -- HMDB51 bank vectors are now available for download in Python and NumPy formats, and in 2 scales.

- 31 May 2012 -- UCF50 bank vectors are now available for download in Python and NumPy formats.

- 31 May 2012 -- A new PDF has been posted for the CVPR 2012 paper. This updated version fixes an error in our experimental evaluation on the HMDB51 data set, which reported results that were not directly comparable to those in the benchmark results, and clarifies the UCF50 experimental setting. We have noted this change in the pdf directly (the updated version will appear in IEEE Xplore, but the conference proceedings may have the older/incorrect version). We apologize for this confusion; our goal is to report the most appropriate comparative findings to the community. Note, the original reviewed paper had no HMDB51 results as the data set was not yet available.

Code / Download:

- The code is downloadable here. This download includes the (Python) source code for the complete Action Bank™ feature representation, an example of SVM-based classification, some tools (such as a converter to a Matlab-readable format), a thorough README with instructions on code use and data format, the full set of bank templates used in our CVPR 2012 paper, and scripts that reproduce the results from our CVPR 2012 paper (these need the processed bank representations below).

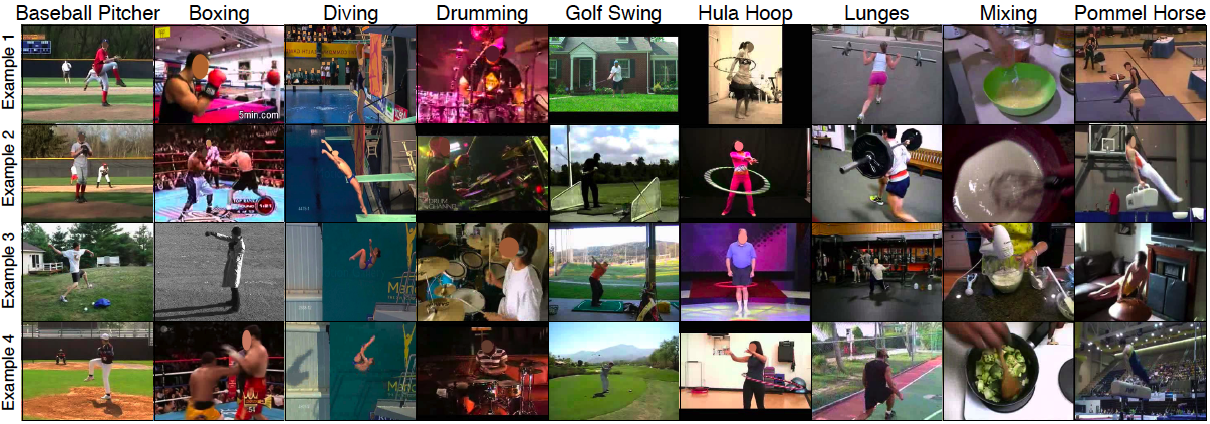

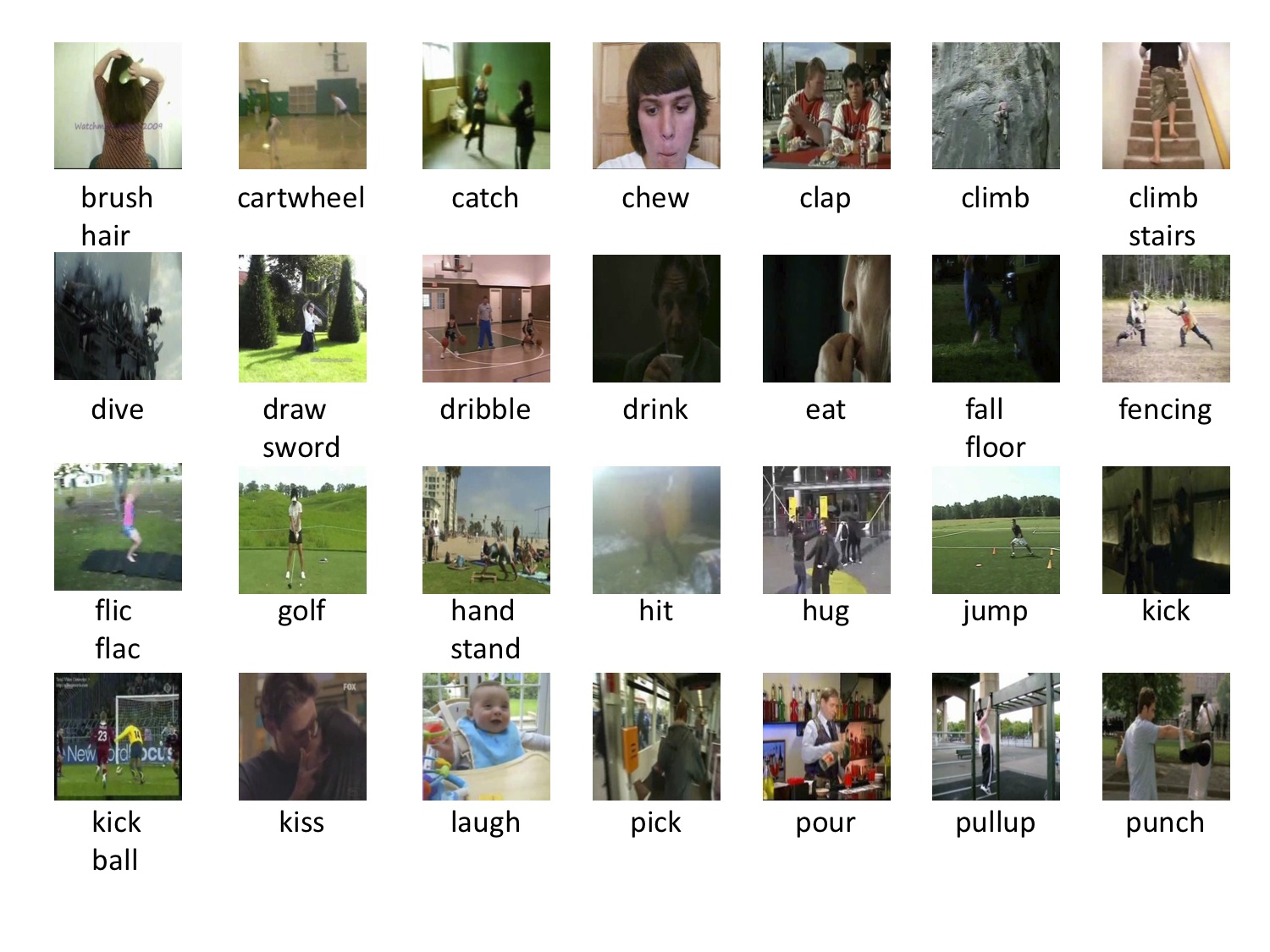

Action Bank™ Versions of Data Sets

| Dataset Name | Dataset Thumbnail | Raw Videos | Action Bank™ Encoded Versions |
| --- | --- | --- | --- |
| KTH | | Download the original videos from here. | 1 Scale: Python / Matlab |
| UCF Sports | | Download the original videos from here. | 2 Scales: Python / Matlab |
| UCF50 | | Download the original videos from here. | 1 Scale: Python / Matlab. Group-wise cross-validation script. Group-wise 5-fold splits for cross-validation observing video-group boundaries. |
| HMDB51 | | Download the original videos from here. | 1 Scale (e1f1g2): Python / Matlab. The CVPR 2012 results were computed from this data; no differences in combining scales were observed, but we provide the data anyway. 1 Scale (e2f1g2): Python / Matlab. 2 Scales (e1f1g2 and e2f1g2): Python / Matlab. Three-Way Min-Overlap Splits Script. |
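To make "observing video-group boundaries" concrete for the UCF50 entry, here is a minimal sketch of a group-wise fold assignment. It assumes clip names of the form v_BaseballPitch_g01_c01.avi, with the number after `_g` identifying the video group. The helper names and the modulo rule are hypothetical. This is not the posted split, so use the downloadable splits when you want comparable numbers.

```python
import re
from collections import defaultdict

# Assumed naming scheme: v_<Class>_g<group>_c<clip>.avi
GROUP_RE = re.compile(r"_g(\d+)_c\d+")

def group_of(filename):
    """Extract the group number from a name like v_BaseballPitch_g01_c01.avi."""
    match = GROUP_RE.search(filename)
    if match is None:
        raise ValueError("no group id found in %r" % filename)
    return int(match.group(1))

def groupwise_folds(filenames, n_folds=5):
    """Assign whole groups to folds so that no group is split across folds.

    Illustrative rule only: fold index = group number modulo n_folds.
    """
    folds = defaultdict(list)
    for name in filenames:
        folds[group_of(name) % n_folds].append(name)
    return [folds[i] for i in range(n_folds)]
```

Because every clip from a group lands in the same fold, clips from one group never appear in both the training and the test side of a split.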

Benchmark Results

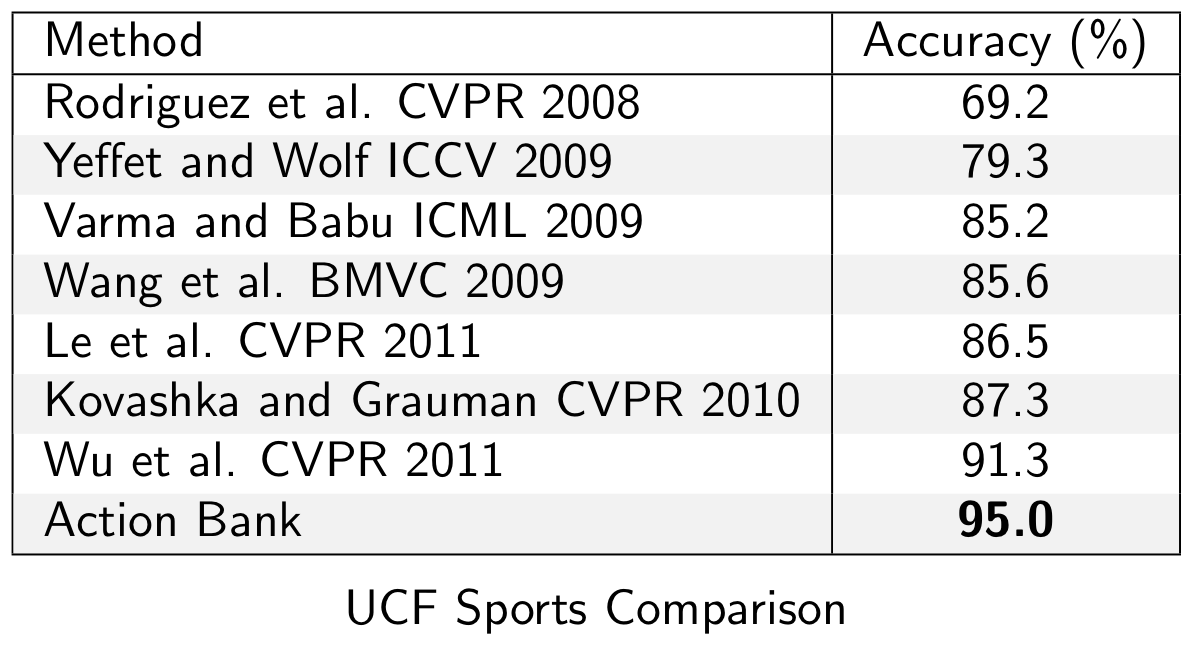

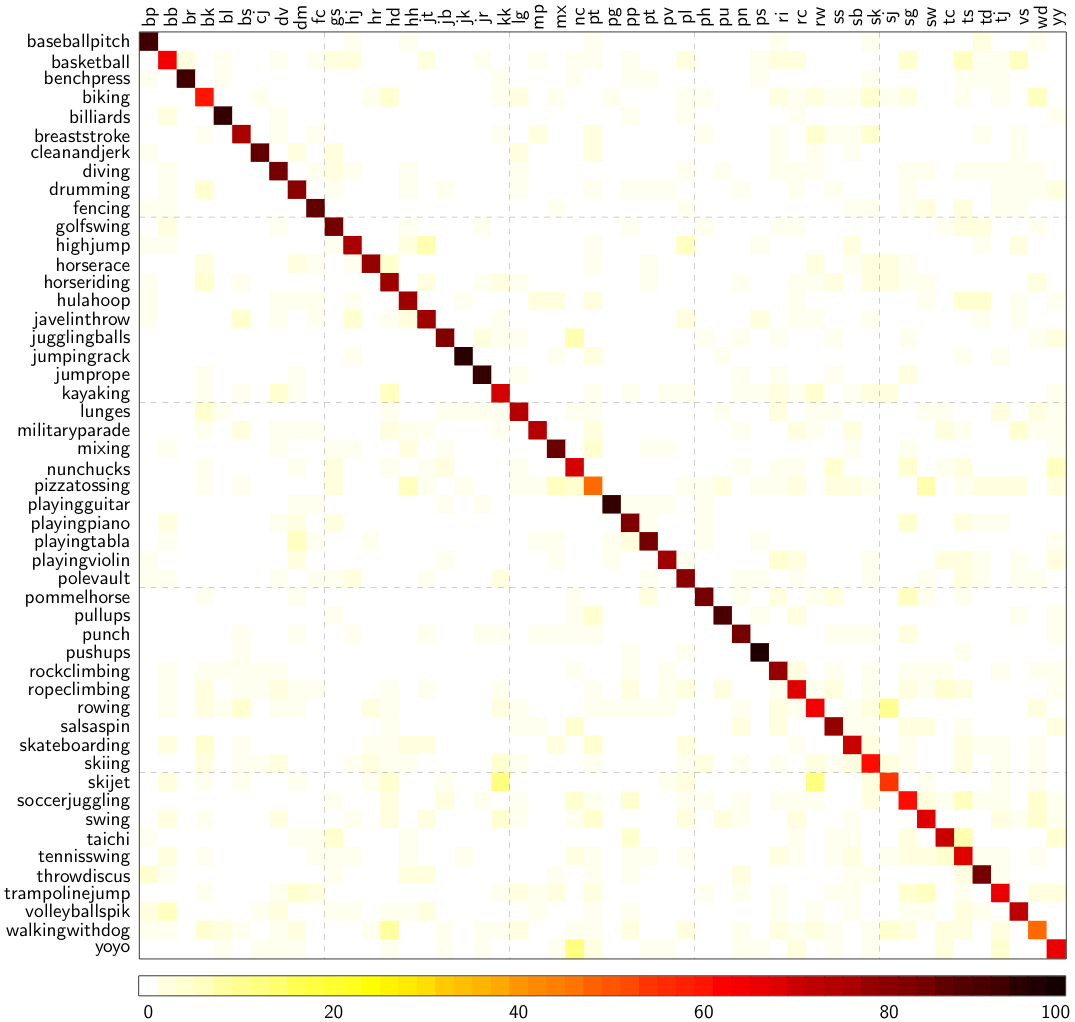

We have tested Action Bank™ on a variety of activity recognition data sets. See the paper for full details. Here, we include a sampling of the results on UCF Sports, UCF50, and HMDB51.

Publications:

| [1] | S. Sadanand and J. J. Corso. Action bank: A high-level representation of activity in video. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2012. [ bib | code | project | .pdf ] |

FAQ / Help

Below, we try to answer some frequent questions about running the code and/or using the output bank vectors.

- I am running the software on a video and it hangs; what's going on?

- I get a RuntimeWarning on divide by zero in spotting.py.

- The classify() function in ab_svm.py causes an AttributeError and does not work.

Question 1:

I am running the software on a video and it hangs; what's going on?

The most likely answer to this question is not that the system is hanging but that it is still working through the method, which is relatively computationally expensive (especially in this pure Python form).

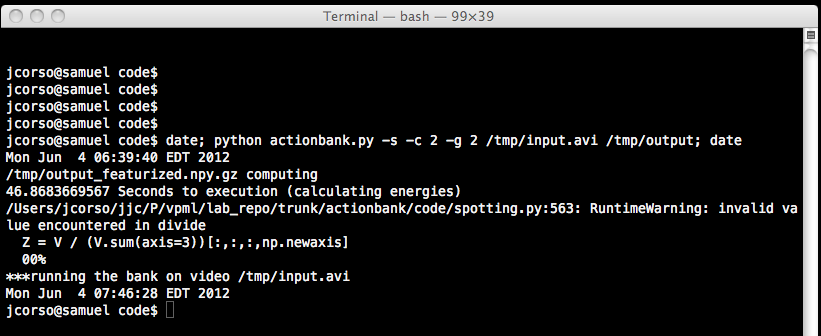

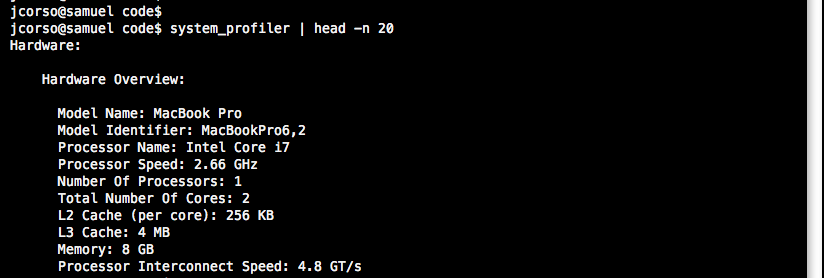

Here, I run through an example to give you an idea of what you should see. I am processing the first video in the UCF50 BaseballPitch class (named v_BaseballPitch_g01_c01.avi). This video is 320x240 and has 107 frames; it is not a big video. I copied and renamed it to /tmp/input.avi.

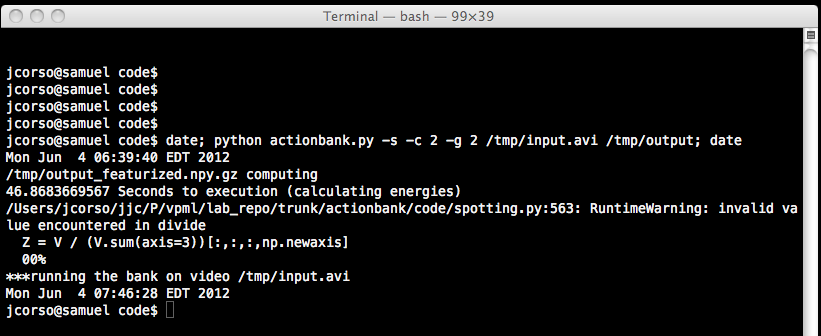

Now, from inside the actionbank/code folder, I call Action Bank on this single video with the command

python actionbank.py -s -c 2 -g 2 /tmp/input.avi /tmp/output

The -s means this is a single video and not a directory of videos. The -c 2 means use 2 cores for processing. The -g 2 means reduce the video by a factor of two before applying the bank detectors (but after featurizing).
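If you want to launch the same command from a script, a small wrapper along these lines may help. It uses only the flags described above (-s, -c, -g). The helper names `actionbank_command` and `run_actionbank`, and the default `code_dir`, are hypothetical and not part of the download.

```python
import subprocess

def actionbank_command(video, output_prefix, cores=2, reduce_factor=2):
    """Build the argument list for processing one video (hypothetical helper)."""
    return ["python", "actionbank.py",
            "-s",                      # single video, not a directory of videos
            "-c", str(cores),          # number of cores to use
            "-g", str(reduce_factor),  # reduction factor applied before the detectors
            video, output_prefix]

def run_actionbank(video, output_prefix, code_dir="actionbank/code", **kwargs):
    """Run the command from inside the code folder; blocks until it finishes."""
    subprocess.check_call(actionbank_command(video, output_prefix, **kwargs),
                          cwd=code_dir)
```

Calling `run_actionbank("/tmp/input.avi", "/tmp/output")` would then be equivalent to typing the command above from inside actionbank/code.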

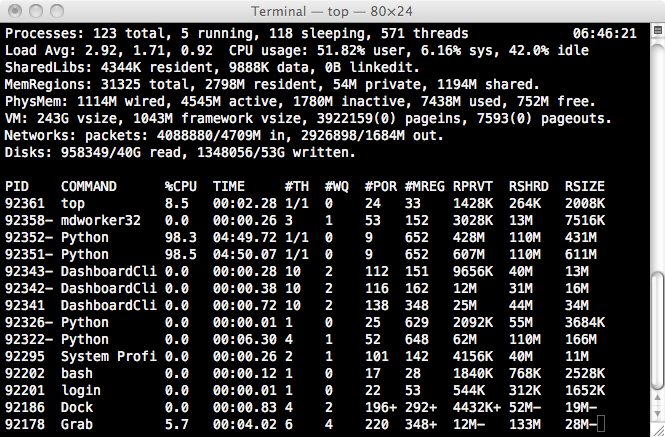

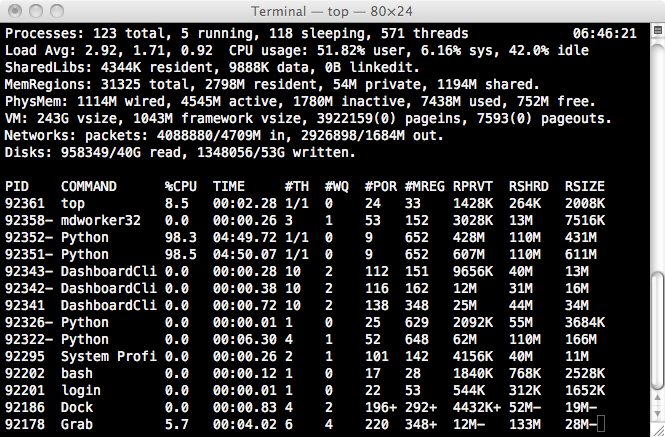

Now, the method will run and run... Here is where it may seem like it

is hanging. But, it's not. It applies each detector in parallel up

to the number of cores you tell it to use. See the output of the

system environment during the run.

You can probably go get a coffee now. Or go to sleep. For this one

video at 320x240 and about 7 seconds, at -g 2 the bank will

take about an hour to process (on my dual-core i7). See the

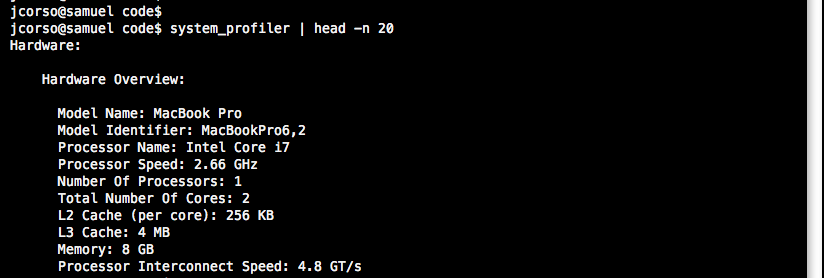

timestamps of running the command-below. The machine stats output is

below.

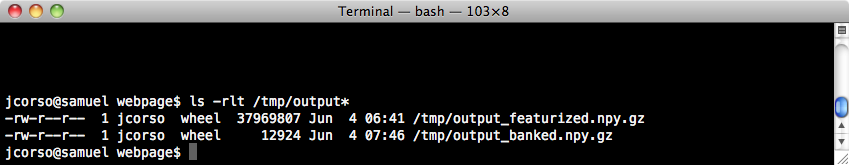

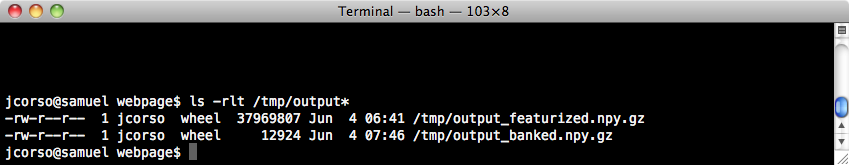

Once this is done, you will have two new files in /tmp: /tmp/output_featurized.npy.gz and /tmp/output_banked.npy.gz, which are the outputs. You can effectively discard the _featurized.npy.gz file; the bank vector is in the _banked.npy.gz file. So, you can see that it takes about two hours to process a relatively small video on a single core. If you increase the number of cores, you will see a corresponding decrease in processing time (roughly proportional to the number of cores used). We, for example, process on a Linux cluster with 12 cores per machine and can process all of UCF50 in about 20 hours on 32 machines. But we agree this is time-consuming, so we have provided the bank output vectors above for many data sets. We are also working on speeding up Action Bank and will post updated code here (the current code is pure Python and not the most efficient).
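To read the _banked.npy.gz output back into Python, something like the following should work, assuming the file is a gzip-compressed NumPy .npy array holding plain numeric data (the README in the download is the authoritative description of the format). The helper name `load_banked` is ours.

```python
import gzip
import io

import numpy as np

def load_banked(path):
    """Load a gzip-compressed .npy array such as /tmp/output_banked.npy.gz."""
    with gzip.open(path, "rb") as handle:
        raw = handle.read()          # decompress the whole file into memory
    return np.load(io.BytesIO(raw))  # parse the .npy payload

# Example (path taken from the walkthrough above):
# bank_vector = load_banked("/tmp/output_banked.npy.gz")
# print(bank_vector.shape)
```

Decompressing into memory first avoids relying on seek support in the gzip file object, at the cost of holding the raw bytes and the array at the same time.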


Question 2:

I get this runtime warning when I run the code:

    actionbank/code/spotting.py:563: RuntimeWarning: invalid value encountered in divide
      Z = V / (V.sum(axis=3))[:,:,:,np.newaxis]

This warning means that there is no motion energy at all for a pixel in the video, which is quite possible for typical videos. We explicitly handle it in the subsequent lines of spotting.py by checking for NaN and Inf. In other words, you can disregard the runtime warning.
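The pattern is easy to reproduce in isolation. The sketch below normalizes a 4D array along its last axis, as in the line quoted in the warning, and then cleans up the undefined entries. Replacing them with zeros is an assumption made for illustration, as is the function name `normalize_energies`; the actual handling in spotting.py may differ.

```python
import numpy as np

def normalize_energies(V):
    """Normalize a 4D energy array along its last axis, zeroing undefined entries."""
    V = np.asarray(V, dtype=float)
    # Pixels whose energies sum to zero produce 0/0; silence the expected warning.
    with np.errstate(divide="ignore", invalid="ignore"):
        Z = V / V.sum(axis=3)[:, :, :, np.newaxis]
    # Assumption: pixels with no motion energy get zeros (released code may differ).
    Z[~np.isfinite(Z)] = 0.0
    return Z
```

A pixel with energies (1, 1, 2) becomes (0.25, 0.25, 0.5), while a pixel with all-zero energies stays all zero instead of turning into NaN.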

Question 3:

The classify function call in ab_svm.py gives an AttributeError. For example, when I run ab_kth_svm.py, I get the following error:

    Traceback (most recent call last):
      File "ab_kth_svm.py", line 99, in <module>
        res=ab_svm.SVMLinear(Dtrain,np.int32(Ytrain),Dtest)
      File "/home/foo/actionbank_v1_0/code/ab_svm.py", line 263, in SVMLinear
        res = svm.classify(testfeats).get_labels()
      File "/home/foo/epd-7.3-1-rh5-x86_64/lib/python2.7/site-packages/modshogun.py", line 21621, in <lambda>
        __getattr__ = lambda self, name: _swig_getattr(self, SVMOcas, name)
      File "/home/foo/epd-7.3-1-rh5-x86_64/lib/python2.7/site-packages/modshogun.py", line 59, in _swig_getattr
        raise AttributeError(name)
    AttributeError: classify

This seems to be caused by a change in the Shogun library interface. Our work was performed with Shogun version libshogun (x86_64/v0.9.3_r4889_2010-05-27_20:52_4889). In newer versions of Shogun, classify is replaced with apply. Note that we have not yet tested this in house, and results may vary.
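One way to cope with both interfaces is a small compatibility helper that prefers apply and falls back to classify. This is a sketch under the assumption that the object returned by either method still offers get_labels(), as in the traceback above. The helper name `predict_labels` is ours, and it has not been tested against any particular Shogun release.

```python
def predict_labels(svm, testfeats):
    """Call svm.apply() when it exists, otherwise fall back to svm.classify().

    Assumes the returned object provides get_labels(), as in ab_svm.py.
    """
    predict = getattr(svm, "apply", None)
    if predict is None:
        predict = svm.classify  # older interface, as used in ab_svm.py
    return predict(testfeats).get_labels()
```

In ab_svm.py, the failing line `res = svm.classify(testfeats).get_labels()` could then become `res = predict_labels(svm, testfeats)`.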

We also want to point out that the ab_svm.py module is included as an example of how to use the Action Bank output for classification. One can use other preferred classifiers or platforms, such as Random Forests or Matlab, respectively.