Problem Set 2: DNA Sequence Alignment

- Bring in to class a stapled turn-in containing your written answers. In Problem Set 1, we required you to print out your normal Python source code. For Problem Set 2, you do not have to print out your Python source code unless the question (e.g., Question 1) specifically demands it. In addition to your names, please include your UVA IDs (e.g., mst3k) in big block letters at the top of your turn-in.

- Use our automatic adjudication service to submit a single Python file (a modification of ps2.py) containing all your code. You must define an author list containing the UVA IDs of both partners as its elements. Each partner must separate a copy (so that each partner has a grade in our system).

Collaboration Policy

Before starting to work with your partner, you should go through Question 1 yourself on paper. When you meet with your partner, check if you made the same predictions. If there are any discrepancies, try to decide which is correct before using Python to evaluate the expressions.

You and your partner should work together on the rest of the assignment. However, you will each turn in separate stapled documents containing your answers to the written questions. You should read the whole problem set yourself and think about the questions before beginning to work on them with your partner.

Remember to follow the pledge you read and signed at the beginning of the semester. For this assignment, you may consult any outside resources, including books, papers, web sites and people, you wish except for materials from previous cs1120, cs150, and cs200 courses. You may consult an outside person (e.g., another friend who is a CS major but is not in this class) who is not a member of the course staff, but that person cannot type anything in for you and all work must remain your own. That is, you can ask general questions such as "can you explain recursion to me?" or "how do lists work in Python?", but outside sources should never give you specific answers to problem set questions. If you use resources other than the class materials, lectures and course staff, explain what you used in your turn-in.

You are strongly encouraged to take advantage of the scheduled help hours and office hours for this course.

Purpose

- Practice programming with procedures, including using if, applications, and definitions.

- Become familiar with Lists and how they can be used to manage complex data.

- Understand and construct recursive definitions.

- Learn a little about genomics.

Optional Reading: Complete the first half of Unit 2 of Udacity Introduction to Computing.

Becoming Pros at Construcing Lists

- Explain why the fragment is not a valid Python expression; or,

- Predict what value the expression will evaluate to.

-

[1, 2] + 3

-

[1, 2] + [3]

-

[1] + [2, 3]

-

1 + [2, 3]

-

[1,2][0]

-

"dna"[1:]

-

([1,2][1:])[0]

-

len([1,2,3][1:])

-

len([1,2,3][0])

-

len([1,2,3][1:][1:][1:])

-

len([1,2] + [3,4])

-

len([1,2,3] + 4)

-

len([6,7,8] + [])

-

map(lambda (x) : x+4, [1,2,3])

-

map(lambda (x) : x, "rna")

-

[y*2 for y in [6,7,8]]

-

map(len, [ [1,2,3] , [4,5], [6] ])

For the remainder of this problem set, you should work with your partner. First, compare and discuss your answers on Question 1. If you and your partner have different answers, try to decide which is correct. Then, download the PS2 code so you can check your answers in the interpreter.

- ps2.py— A template for your answers. You should do the problem set by editing this file.

Genomics Background

For this problem set, you will develop procedures to solve some problems in genomics. The main problem we want to solve is known as the sequence alignment problem. It is used to compare genomes to understand how they might be related, both in terms of their function and how they might have evolved from common ancestors.

Most information in biology is encoded in DNA, a nucleic acid with two very useful properties for storing and processing information. The first is that DNA is very stable, so preserves information for a long time. This is why it was possible to sequence DNA from a woolly mammoth, even though it was over 300,000 years old.

Most information in biology is encoded in DNA, a nucleic acid with two very useful properties for storing and processing information. The first is that DNA is very stable, so preserves information for a long time. This is why it was possible to sequence DNA from a woolly mammoth, even though it was over 300,000 years old.

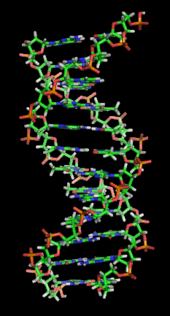

The second property is that DNA can be reliably replicated. DNA is composed of two chains of nucleotides that are intertwined in a double helix. The chains are connected by chemical bonds. Each nucleotide is one of four bases:

- Adenine (A),

- Cytosine (C),

- Guanine (G), and

- Thymine (T).

We will use the strings "A", "C", "G", and "T" to represent the four bases. You can test to see if two strings are equal with the double equal sign ==. For example:

>>> "A" == "A" True >>> "A" == "C" False

You should get these interactions:

>>> nucleotide_complement('A')

'T'

>>> nucleotide_complement('G')

'C'

>>> nucleotide_complement('C')

'G'

>>> nucleotide_complement('T')

'A'

We'll want to find the complement of an entire DNA sequence, not just a single nucleotide. Conveniently, Python allows you to treat lists and strings uniformly in many cases (a string is just a list of characters):

>>> "ant"[0]

'a'

>>> ["a","n","t"][0]

'a'

>>> "ant"[1:]

'nt'

>>> ["a","n","t"][1:]

["n","t"]

>>> if "a":

print 'one'

one

>>> if "":

print 'two'

>>> if ["a"]:

print 'three'

three

>>> if []:

print 'four'

>>> sequence_complement(['A','C','A','T'])

['T', 'G', 'T', 'A']

>>> sequence_complement([])

[]

>>> sequence_complement("GATTACA")

['C', 'T', 'A', 'A', 'T', 'G', 'T']

>>> sequence_complement("")

[]

(Hint0: the body of this procedure can be very short.)

(Hint1: Checking if argument == []: will not work as a base case for strings even though it works as a base case for lists. Try if len(argument) == 0: or if not argument: instead.)

Hamming Distance

The DNA copying mechanism is quite reliable, making about 1 error for every 100,000 nucleotides. The human genome (that is, all of a human’s genetic information) is about 6 Billion bases long, though, so that means every time a human cell (which contains two versions of the genome, one inherited from each parent) divides there would be about 120,000 errors. Fortunately, biology also has mechanisms for error-correction that fix most of the copying mistakes. After the error correction, the remaining number of mistakes is about one per 100 million bases copied. (For more on this, see DNA Replication and Causes of Mutation, Nature Education, 2008.)

The mutations that remain after the error-correction are mostly inconsequential, but some of them change the proteins the cell produces in ways that change the organism. Most changes are disastrous, and leave a cell that cannot produce a normal organism. Very occasionally, the copying mistakes turn out to be useful and lead to an organism that is more likely to survive and reproduce than organisms whose DNA does not have the mutations. As a result of natural selection, the new, mutated, version of the DNA will become the dominant version.

A very rough measure of the distance between two nucleotide sequences is to just count the number of positions where the bases are different. This is known as the Hamming distance. (The Hamming distance is very useful for error-correcting codes and cryptography, but, as we will see soon, not actually very useful in genomics.)

>>> count_matches(['A','C','A','T'], 'A') 2 >>> count_matches([], 'A') 0 >>> count_matches(['A','C'], 'G') 0(Note: This is not actually useful for defining Hamming distance, but this question will give you practice with an easier recursive procedure before trying Hamming distance.)

>>> hamming_distance([], [])

0

>>> hamming_distance(['A', 'C', 'A', 'T'], ['A', 'C', 'T', 'A'])

2 # last two elements do not match

>>> hamming_distance("erode", "geode")

2 # first two characters do not match

Edit Distance

In reality, not all of the copying mistakes are point mutations, however. It is also (relatively) common for there to be a copying error where one or more bases are skipped (a deletion), or added (an insertion) into the (mis-)copy. This makes it tougher to measure how closely related two sequences are. For example, a single base insertion at the beginning of a sequence makes every subsequent base a mismatch and the Hamming distance would be (nearly) the length of the rest of the sequence even though there was only a single copying mistake.

To account for this we need a more complex way of measuring distance, known as Levenshtein distance, or more simply, edit distance. The edit distance accounts for the three different types of copying errors that might occur: a base could be skipped in the copy (deletion), a base could be inserted between two of the original bases (insertion), or a base could be replaced with a different base (mutation). To understand how closely related two DNA sequences are, a biologists wants to know the number of copying errors that separate them. (In reality, if both sequences may have started from a common ancestor, the errors happened along the paths to both of the sequences. But, an insertion in one sequence is similar to a deletion in the other sequence, so it is still useful to just look for the number of copying errors between the two comparison sequences.)

The goal of sequence alignment is to find the minimum number of edits necessary to make two (or more) sequences identical. Biologists usually think of this as inserting gaps in the sequences to make them line up better (hence the name, alignment). We will view it another way.

Edit Distance Analogy — Spell Checking

Typographic errors (typos) and spell mistakes are surprisingly common. Let's consider three vaguely-common examples:

- You mean to type time but you mistakenly type timne.

- You mean to type great but you mistakenly type grat.

- You mean to type mover but you mistakenly type lover.

The situation is even worse in the modern era of cellphone autocorrect, but let's just consider the three examples above. In some sense, all of them are "off by one letter". The first features a mistaken insertion (of n). The second features a mistaken deletion (of e). The third features a mistaken replacement (m was replaced by l). DNA replication can make many of the same types of mistakes, so we want to be able to compute the number of "one letter spelling mistakes" between two words. That's exactly the Levenshtein distance!

Let's look at another example. Suppose we are trying to type great but we make mistakes. The following words increasingly far from what we wanted:

- great has distance 0 — it's what we wanted!

- greaty has distance 1 — it has one insert.

- grety has distance 2. You could either say that we inserted the y and deleted the a, or you could just say that the last two letters are wrong and have to be replaced.

- gr has distance 3 — we've deleted three letters!

- gweatxyz has distance 4 — we've replaced the second letter and have inserted three more at the end.

- "ACAT"

"ACA" - "AACCT"

"CCCCG" - "GATTACA"

"GACACA" - "ACAT"

"C" - "AAAAAAAAAAAAAAAAAA"

"AAAAAAAAAAAAAAAAAA"

Edit Distance Computation — Breaking Down the Problem

As usual in computer science, to solve difficult problems we like to break them down into smaller pieces. Since edit distance involves sequences of letters (i.e., English words or DNA bases), a smaller problem would just be a smaller sequence of letters — a smaller word! Let's see if we can define the edit distance of two words in terms of the edit distance of two smaller words.

Consider the following puzzling task. Suppose I want you to compute the edit distance between great and another word, but I don't tell you all of the other word! Instead, I only tell you that it begins with an r... and has at least one letter. Depending on the rest of that second word, the edit distance could be as low as 1. Example:

- If the second word is reat, the first letter has been deleted, so the edit distance is 1.

- If the second word is rgreat, a single additional letter has been inserted, so the edit distance is 1.

- If the second word is rreat, the first letter has been replaced, so the edit distance is 1.

Suppose we are given to words, a and b, with letters a1 a2 a3 ... an and b1 b2 b3 ... bm. For the purposes of illustration, suppose word a is the "right answer" we were trying to type, and word b is something we "bashed out" that may have a few typos. Then the edit distance can be computed as follows:

- If the first word is empty (i.e., if n is zero), then you have to insert all of the characters in the other word to make them match, so the edit distance is m.

- If the second word is empty (i.e., if m is zero), then you have to insert all of the characters in the first word to make them match, so the edit distance is n.

- Otherwise, return the smallest number given by these four cases:

- (Perhaps you forgot to type the first letter of a.) Compute the edit distance between a2 a3 ... an and b1 b2 b3 ... bm and then add 1 (to account for the letter a1 that you forgot).

- (Perhaps you mistakenly typed b1 but you really should not have.) Compute the edit distance between a1 a2 a3 ... an and b2 b3 ... bm and then add 1 (to account for the mistaken insertion of b1).

- (Perhaps you typed the wrong letter in this sport.) If b1 is different from a1, then compute the edit distance between a2 a3 ... an and b2 b3 ... bm and add 1 (to account for typing b1 when you meant to type a1).

- (Perhaps you got this letter right!) If b1 is equal to a1, then compute the edit distance between a2 a3 ... an and b2 b3 ... bm and add nothing.

Optional: Edit Distance Computation — Alternate Problem Description

The notion of edit distance may not be obvious the first time you read about it. Here we provide an alternate, independent description of the algorithm. Seeing multiple presentations may make it easier to grasp.

An algorithm for finding the edit distance between two sequences, s and t, is to find the minimum of all possibilities. Consider the sequence as lists of elements, s is (s0 s1 s2 s3 ... sn) and t is (t0 t1 t2 ... tm) (note that we cannot assume the number of elements in each sequence is the same). So, to start we have three options for the first element:

- the base s0 was inserted in s. This has edit cost of 1 for the insertion, and means the sequences before the insert were (s1 s2 ... sn) and (t0 t1 t2 ... tm).

- the base t0 was inserted in t (or equivalently, there was some base x that was before s0 which was deleted). This has edit cost of 1 for the deletion, and means the sequences before the deletion were (s0 s1 s2 ... sn) and (t1 t2 ... tm).

- there was no insertion of deletion. This means s0 and t0 are aligned. If they match, the edit cost is 0 since they are the same no edit is needed. If they are different, the edit cost is 1 since there was a point mutation. After matching s0 and t0, the remaining sequences are (s1 s2 ... sn) and (t1 t2 ... tn).

We want to pick the option that leads to the minimum total edit distance. To know which is best, though, we need to know the edit distance of the sequences that remain after the choice. This is a recursive definition!

We want to find the value of the minimum of the three options:

- 1 + edit distance between (s1 s2 ... sn) and (t0 t1 t2 ... tm)(insert)

- 1 + edit distance between (s0 s1 s2 ... sn) and (t1 t2 ... tm)(delete)

- edit distance between (s1 s2 ... sn) and (t1 t2 ... tm) + 0 (if s0 matches t0) or 1 (if s0 does not match t0).

Edit Distance Computation — Programming Problem

Now you must implement an algorithm to compute the edit distance.

As a helpful hint, note the Python has a built-in min function that takes any number of arguments and returns the value of the smallest one:

>>> min(5,7) 7 >>> min(5,1,7) 1 >>> x = 3 >>> min(5,7,x) 3

Question 8: Define a procedure, edit_distance, that takes as input two lists (or two strings — your code will work for both) and outputs the minimum edit distance between those sequences. Try this on your own first, but it is pretty tricky. Don't forget to take advantage of the available help.

Try your procedure on the "great" examples above. You can try it on the longer example also, but unless you were extraordinarily clever, it will probably not finish executing.

Memoizing

We have a serious problem if our edit_distance procedure takes too long to execute on strings like "AAAAAAAAAAAAAAAAAA" (10 bases), since proteins contain hundreds of amino acids (each of which requires 3 bases to encode). If we want to solve any interesting problems in biology we need a edit_distance procedure that is much faster than this one.

Why is the edit_distance procedure you defined in the last Question so slow? (Think about this yourself first before reading on.)

The reason the recursive definition of edit distance as described is so slow is because it is doing so much redundant work. For example, supposed were are computing edit_distance("ACT","ACT"). Using the three options, we want to find

min( (1 + edit_distance("CT","ACT")),

(1 + edit_distance("ACT","CT")),

(0 + edit_distance("CT","CT")))

To evaluate this application expression, the interpreter needs to evaluate all the subexpressions. The first operand subexpression is (1 + edit_distance("CT","ACT")) which requires evaluating edit_distance("CT","ACT"). As before, we need to find the minimum of the three options. In this case,

min( (1 + edit_distance("T","ACT")),

(1 + edit_distance("CT","CT")),

(1 + edit_distance("T","CT")))

Note that one of these, (1 + edit_distance("CT","CT")) is identical to the third option for the first edit_distance call. But, the interpreter doesn’t know that. It just follows the evaluation rules, re-evaluating the same expression every time it appears. In evaluating a big edit_distance application, there are quadrillions of identical evaluations of edit_distance, all of which are redundantly evaluated by the evaluator.

One solution is to store the results of previous evaluations, so they don’t need to be recomputed. This is known as memoizing.

Memoizing is fairly easy to do in many programming languages. Python provides a way to "decorate" functions and indicate that they should be memoized. We have provided code to do so below (and in comments in ps2.py). It is not necessary for you to understand how memoize is defined (indeed, it uses some special forms that we have not yet introduced). The memoize procedure takes as input a procedure and outputs a new procedure. The output procedure is similar to the input procedure, except whenever it is evaluated it store the result in a table. If it is re-evaluated on the same inputs, instead of needing to evaluate the full function, it looks up the value from the previous evaluation in the table and evaluates to that value right away.

import sys

sys.setrecursionlimit(25000)

def memoize(your_function):

cache = { }

def memoized_function(*argument):

if argument not in cache:

cache[argument] = your_function(*argument)

return cache[argument]

return memoized_function

# This @memoize decorates your edit_distance procedure

# with this notion of memoization.

@memoize

def edit_distance(a,b):

# ...

In your ps2.py file we have included some (abridged) actual gene data: a normal "mus musculus apolipoprotein E" gene (call it apoe) and the E4 variant of it associated with Alzheimer's disease.

An individual who has the E4 variant in both copies of their genome is about 30 times more likely to develop Alzheimer’s disease by age 75, compared to individuals who have both copies of their genome with the normal variant. With no copies of the E4 variant, someone has a 7% chance of developing Alzheimer’s; with one E4 variant, there is a 14% chance; with both copies as E4 variant, the chance of developing Alzheimer’s by age 75 is around 80%.

Try to compute the edit_distance between apoe_normal and apoe_bad. Without memoization, you'll probably have to terminate the Python process.

Question 9 (Extra Credit): Augment your edit_distance answer using the memoization decoration and figure out the edit distance between apoe_normal and apoe_bad. Write that answer down on your on-paper turn in for +1 point of extra credit on this problem set. This is extra credit because it involves a number of concepts we have not explicitly covered in class yet — but we will later!