Data-Driven 3D Primitives for Single Image Understanding

People

Abstract

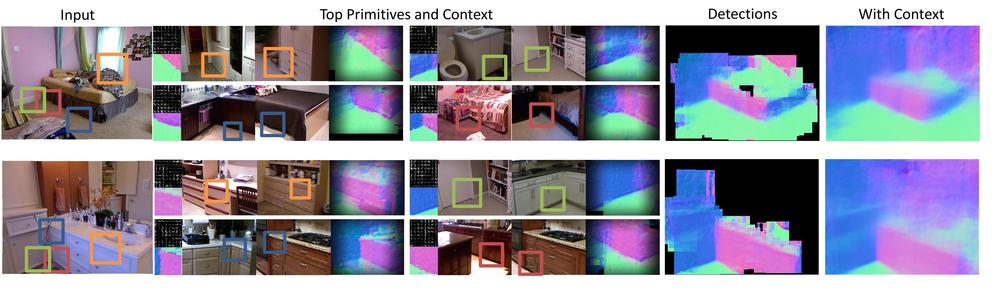

What primitives should we use to infer the rich 3D world behind an image? We argue that these primitives should be both visually discriminative and geometrically informative and we present a technique for discovering such primitives. We demonstrate the utility of our primitives by using them to infer the 3D surface normals given a single image. Our technique substantially outperforms the state-of-the-art and shows improved cross-dataset performance.

Paper

- ICCV Paper (pdf)

- Addendum: results on standard train/test (pdf)

- Poster (pdf)

- Citation

Extended Results

We are providing a number of documents as supplemental material:

- Selected Art Examples [3.9MB]

- Additional 3D Primitive Examples [pdf, 3.4MB]

- Additional results on NYU v2 [pdf, 9.2MB]

- Additional cross-dataset results [pdf, 7.2MB]

- Statistical analysis of the results [pdf, 279KB]

Code/Data

We will provide two versions of the code. One provides a black-box version of the system that can be easily plugged into other scene understanding tasks. The other is the version used internally that includes training code.

We also have precomputed results for many indoor scene understanding datasets. Please contact David Fouhey for these.

- Prediction Only (Now Available)

  [Code (2.9MB .zip), version 1.02, updated 8/14/2014]

  [Data (926MB .tar.gz)]

  [Model for standard train/test (1.5GB zip)]

  This is a streamlined version of the prediction code and a model pre-trained on the NYU v2 dataset (be careful which one you download -- one is trained on the standard train/test split, the other is not!).

  This can also be used as a feature in other vision tasks.

- Training Code (New!)
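As a rough illustration of using predicted surface normals as a feature in another vision task, the sketch below pools a per-pixel normal map into a small orientation histogram. It is not part of the released code and does not reflect its interface: the input layout (rows of `(nx, ny, nz)` tuples), the function names `normalize` and `normal_histogram`, and the choice of six axis-aligned bins are all assumptions made for illustration.

```python
import math

# Hypothetical bins (assumption): the six axis-aligned unit directions.
CANONICAL_DIRECTIONS = [
    (1.0, 0.0, 0.0), (-1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0), (0.0, -1.0, 0.0),
    (0.0, 0.0, 1.0), (0.0, 0.0, -1.0),
]


def normalize(v):
    """Scale a 3-vector to unit length; return None for a zero vector."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        return None
    return tuple(c / norm for c in v)


def normal_histogram(normal_map, directions=CANONICAL_DIRECTIONS):
    """Pool a normal map into a histogram over canonical directions.

    normal_map is assumed to be a list of rows, each row a list of
    (nx, ny, nz) tuples. Each valid normal votes for the direction with
    the largest dot product; zero-length normals are skipped.
    """
    counts = [0] * len(directions)
    for row in normal_map:
        for n in row:
            unit = normalize(n)
            if unit is None:
                continue  # treat zero vectors as missing predictions
            dots = [sum(a * b for a, b in zip(unit, d)) for d in directions]
            counts[dots.index(max(dots))] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * len(directions)
    return [c / total for c in counts]


# Toy 2x2 normal map: two pixels facing +z, one facing +x, one missing.
toy_map = [[(0, 0, 2), (0, 0, 1)], [(1, 0, 0), (0, 0, 0)]]
feature = normal_histogram(toy_map)
```

A fixed-length vector like `feature` could then be concatenated with other descriptors for a downstream classifier; finer bins or spatial pooling would be natural variations.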

Funding

This research is supported by:

- NSF Graduate Research Fellowship for David Fouhey

- NSF IIS-1320083

- NSF IIS-0905402

- ONR-MURI N000141010934

- A gift from Bosch Research & Technology Center

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright.